Individual Provider Metrics for the PAMM Method

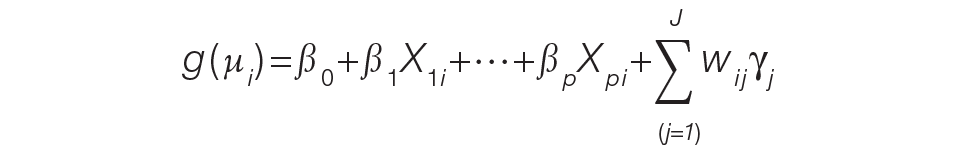

For the PAMM method, model-based metrics were derived using an MM model.8 Specifically, let $J$ be the number of rotating providers in a health care system. Let $Y_i$ be an outcome of interest from hospitalization $i$, let $X_{1i}, \ldots, X_{pi}$ be fixed effects or covariates, and let $\beta_1, \ldots, \beta_p$ be the coefficients for the respective covariates. Then the generalized MM statistical model is

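$$
g(\mu_i) = \beta_0 + \sum_{k=1}^{p} \beta_k X_{ki} + \sum_{j=1}^{J} w_{ij}\,\gamma_j \qquad \text{(Eq. 2)}
$$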

where $g(\mu_i)$ is a link function between the mean of the outcome, $\mu_i = E(Y_i)$, and its linear predictor; $\beta_0$ is the marginal intercept; $w_{ij}$ represents the attribution weight of provider $j$ on hospitalization $i$ (described in Equation 1); and $\gamma_j$ represents the random effect of provider $j$ on the outcome, with $\gamma_j \sim N(0, \sigma_\gamma^2)$.
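To make the structure of Eq. 2 concrete, the sketch below evaluates the linear predictor for a single hospitalization and maps it back to the mean through the inverse logit, as for the binary outcomes (for LOS, the inverse of the log link would be `math.exp` instead). It assumes the coefficients, attribution weights, and random effects are already known; the function names and all numbers are hypothetical, and in the study these quantities were estimated by PROC GLIMMIX rather than supplied by hand.

```python
import math


def linear_predictor(beta0, betas, x_i, weights_i, gammas):
    """Linear predictor for hospitalization i in a multiple-membership model.

    eta_i = beta0 + sum_k betas[k] * x_i[k] + sum_j weights_i[j] * gammas[j]
    """
    fixed_part = beta0 + sum(b * x for b, x in zip(betas, x_i))
    # Each provider j contributes its random effect scaled by its attribution weight.
    membership_part = sum(w * g for w, g in zip(weights_i, gammas))
    return fixed_part + membership_part


def inverse_logit(eta):
    """Inverse of the logit link, mapping eta back to a probability."""
    return 1.0 / (1.0 + math.exp(-eta))


# Hypothetical hospitalization shared by providers 1 and 2 (weights 0.6 and 0.4);
# provider 3 did not treat this patient, so its weight is 0.
eta = linear_predictor(-2.0, [0.5], [1.0], [0.6, 0.4, 0.0], [0.2, -0.1, 0.3])
mu = inverse_logit(eta)  # mean outcome on the probability scale
```

Providers with zero weight drop out of the sum, so each hospitalization is influenced only by the providers attributed to it.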

For the binary mortality and readmission outcomes, logistic regression was performed using a logit link function, with the corresponding expected probability as the only fixed covariate; the expected probabilities were first converted to odds and then log-transformed (that is, expressed as log-odds) before entering the model. For LOS, Poisson regression was performed using a log link function, with the log-transformed expected LOS as the only fixed covariate. For the coded patient experience responses, an ordered logistic regression was performed using a cumulative logit link function, with no fixed effects added.
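The two covariate transformations described above can be sketched as follows. The function names and input values are hypothetical; only the transformations themselves (probability to odds to log-odds, and the log of expected LOS) come from the text.

```python
import math


def logit_expected(p_expected):
    """Expected probability -> odds -> log-odds (the logit of p_expected)."""
    if not 0.0 < p_expected < 1.0:
        raise ValueError("expected probability must be strictly between 0 and 1")
    odds = p_expected / (1.0 - p_expected)
    return math.log(odds)


def log_expected_los(los_expected):
    """Log-transform a positive expected length of stay."""
    if los_expected <= 0:
        raise ValueError("expected LOS must be positive")
    return math.log(los_expected)


# Hypothetical inputs: 20% expected mortality, 4.5-day expected LOS.
mortality_covariate = logit_expected(0.20)
los_covariate = log_expected_los(4.5)
```

One way to read this choice: because each expected value enters on the scale of its link function, a fitted model with β0 = 0, a coefficient of 1 on this covariate, and all γj = 0 would reproduce each hospitalization's expected value exactly.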

MM Model-based Metrics. Each fitted MM model produces a predicted random effect for each provider. These provider-specific random effects can be interpreted as the unobserved influence of each provider on the outcome after controlling for the fixed effects included in the model. The provider-specific random effects were therefore used to evaluate relative provider performance, serving the same role as the individual provider-level metrics used in the PAPR method.

Measuring provider performance with an MM model is more flexible and more robust to outliers than the standard approach of using OE indices or simple averages. First, although not investigated here, patient-, visit-, provider-, and/or temporal-level covariates can be controlled for when evaluating provider performance. For example, a patient’s socioeconomic status, a provider’s workload, and seasonal factors could be added to the MM model. OE indices do not account for such external factors.

Another advantage of using predicted random effects is the concept of “shrinkage.” Estimating random effects inherently accounts for two situations: small sample sizes (when a provider has not treated enough patients) and a large ratio of patient variance to provider variance (for instance, when patient outcome variability is much greater than provider performance variability). In both cases, the estimated random effect is pulled closer to 0, signaling that the provider’s performance is closer to the population average. See Henderson15 and Mood16 for further details.
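The two drivers of shrinkage can be illustrated with the textbook one-way Gaussian random-intercept case, in which each patient belongs to a single provider and the variances are treated as known. This is a simplified analogue chosen for illustration, not the multiple-membership logistic, Poisson, or ordinal models fitted in this study; the function names and numbers are hypothetical. In that setting the predicted provider effect equals the provider's raw deviation from the overall mean multiplied by λj = σγ² / (σγ² + σe²/nj), where nj is the provider's patient count and σe² is the patient-level variance.

```python
def shrinkage_factor(n_patients, var_provider, var_patient):
    """Weight lambda_j = var_provider / (var_provider + var_patient / n_patients).

    Simplified one-way Gaussian random-intercept setting with known variances;
    not the multiple-membership models fitted in the study.
    """
    return var_provider / (var_provider + var_patient / n_patients)


def shrunken_effect(provider_mean, overall_mean, n_patients, var_provider, var_patient):
    """Predicted provider effect: the raw deviation from the overall mean times lambda_j."""
    lam = shrinkage_factor(n_patients, var_provider, var_patient)
    return lam * (provider_mean - overall_mean)


# Hypothetical example: patient variance is 9 times the provider variance,
# and every provider's raw mean sits 2.0 units above the overall mean.
for n in (1, 9, 81):
    print(n, round(shrunken_effect(12.0, 10.0, n, 1.0, 9.0), 3))  # 0.2, 1.0, 1.8
```

The same raw deviation of 2.0 is reported as 0.2 for a provider with one patient but 1.8 for a provider with 81 patients; raising the patient-to-provider variance ratio lowers λj in the same way.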

In contrast, OE indices can produce unreliable estimates when a provider has cared for few patients. The problem is especially pronounced when the outcome is binary with a low probability of occurring, such as mortality. Indeed, provider-level mortality OE indices are routinely 0 when patient counts are low, which unfairly skews performance rankings. Finally, OE indices ignore the magnitude of the outcome's variance at the provider and patient levels, which can be large.
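The low-volume problem can be seen with a few lines of arithmetic. The sketch assumes the usual OE definition (total observed events divided by the sum of expected probabilities); the function name and the provider's data are hypothetical.

```python
def oe_index(observed, expected):
    """Observed-to-expected ratio: total observed events / total expected events."""
    total_expected = sum(expected)
    if total_expected <= 0:
        raise ValueError("total expected must be positive")
    return sum(observed) / total_expected


# Hypothetical low-volume provider: 10 hospitalizations, each with a 3% expected
# mortality, so only 0.3 deaths are expected in total.
expected = [0.03] * 10
no_deaths = oe_index([0] * 10, expected)       # 0.0
one_death = oe_index([1] + [0] * 9, expected)  # about 3.33
```

A single death moves this provider's index from 0 (apparently perfect) to more than three times the expected rate, whereas the shrunken random effect of a low-volume provider stays close to 0 in either case.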

Comparison Methodology

In this study, we compared the 2 attribution methods, PAPR and PAMM, to determine whether they differ meaningfully when measuring provider performance. Using the retrospective data described in the next section, each attribution method was applied independently to derive provider-level metrics. To assess relative performance, each provider was assigned a percentile based on their metric value, yielding 2 percentile ranks (one per method) for each provider for each metric.
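The text does not specify the percentile formula, so the sketch below assumes a mid-rank convention: percentile = 100 × (average rank − 0.5) / n, with tied values sharing their average rank. The function name and example values are hypothetical. For outcomes where lower is better, such as mortality, the metric could be negated before ranking; what matters for the comparison is that both methods use the same direction.

```python
def percentile_ranks(values):
    """Mid-rank percentiles, 100 * (average rank - 0.5) / n; tied values share a rank.

    Higher metric values receive higher percentiles (an assumed convention).
    """
    n = len(values)
    order = sorted(range(n), key=lambda k: values[k])
    ranks = [0.0] * n
    i = 0
    while i < n:
        # Find the end j of the run of values tied with order[i].
        j = i
        while j + 1 < n and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # 1-based average rank of the tied run
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return [100.0 * (r - 0.5) / n for r in ranks]


# Hypothetical metric values for four providers under one attribution method.
print(percentile_ranks([0.30, -0.12, 0.05, 0.41]))  # [62.5, 12.5, 37.5, 87.5]
```

Running the function once on the PAPR metrics and once on the PAMM metrics gives each provider the pair of percentiles used in the concordance measures.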

Using these paired percentiles, we derived the following measures of concordance, similar to Herzke, Michtalik3: (1) percent concordance, defined as the number of providers who landed in the top half (greater than the median) under both attribution models or in the bottom half under both, divided by the total number of providers; (2) the median absolute difference between each provider’s percentiles under the 2 attribution models; and (3) the Pearson correlation coefficient of the paired percentiles. The first measure is a global measure of concordance between the 2 approaches and would be expected to be 50% by chance. The second measure gauges how much an individual provider’s rank changes when the attribution methodology changes. The third measure quantifies the linear correlation of the paired percentiles and was not included in the Herzke, Michtalik3 study.
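A minimal sketch of the three concordance measures, computed from each provider's pair of percentiles. It assumes "bottom half" means at or below the median, which the text leaves implicit; the function name and the four-provider data are hypothetical.

```python
import math
from statistics import median


def concordance_measures(pct_a, pct_b):
    """Return (percent concordance, median |difference|, Pearson r) for paired percentiles."""
    n = len(pct_a)
    med_a, med_b = median(pct_a), median(pct_b)
    # (1) Same half under both methods: both above the median, or both at/below it.
    same_half = sum((a > med_a) == (b > med_b) for a, b in zip(pct_a, pct_b))
    pct_concordance = 100.0 * same_half / n
    # (2) Median absolute change in a provider's percentile between the two methods.
    med_abs_diff = median(abs(a - b) for a, b in zip(pct_a, pct_b))
    # (3) Pearson correlation of the paired percentiles.
    mean_a, mean_b = sum(pct_a) / n, sum(pct_b) / n
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(pct_a, pct_b))
    var_a = sum((a - mean_a) ** 2 for a in pct_a)
    var_b = sum((b - mean_b) ** 2 for b in pct_b)
    pearson_r = cov / math.sqrt(var_a * var_b)
    return pct_concordance, med_abs_diff, pearson_r


# Hypothetical paired percentiles for four providers (PAPR, PAMM).
papr = [12.5, 37.5, 62.5, 87.5]
pamm = [37.5, 12.5, 62.5, 87.5]
print(concordance_measures(papr, pamm))  # (100.0, 12.5, 0.8)
```

In this toy example the two lowest-ranked providers swap places, so every provider stays in the same half (100% concordance) while the median shift of 12.5 percentile points and r = 0.8 still register the reordering.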

All statistical analyses were performed in SAS (version 9.4; SAS Institute, Cary, NC), and the MM models were fitted using PROC GLIMMIX with the EFFECT statement. The Banner Health Institutional Review Board approved this study.